Sharing Our Passion for Technology & Continuous Learning

This blog post recaps the talk we delivered at the Iowa Technology Summit, DevOpsDays Des Moines, and Midwest Global AI Developer Days conferences.

We are publishing a three-part blog series that distills that talk: Intro to the Current State of AI/ML, Overcoming Challenges to Adoption, and Using AutoML.

About Us

Source Allies is an IT Consultancy founded in 2002 and driven by an Ownership Mindset. We’re Builders: Data Scientists, Engineers (Data, ML, Software, DevOps), Cloud Architects (AWS, Azure & GCP). We create innovative custom software products with our partners using the simplest architecture. Read more about our super-quants here and here.

Introduction

It is an exciting time to be interested in using AI/ML to innovate within your organization. True to the title of this blog, we’re going to show you a set of simple entry points to AI, and specifically to Machine Learning. Some of these entry points are barely a year old.

Just a few years ago, however, getting into AI and ML meant knowing your statistics, linear algebra, and calculus, and keeping up with AI/ML research. That was a significant barrier to getting started, so the AI community began building libraries that abstract AI methods, and the major cloud platforms began introducing end-to-end AI services, or AI building blocks. For example, today you can send a picture of a driver’s license or passport to an API, and a pre-trained model will send back text fields such as the name, address, and expiration date.

Most of these end-to-end AI building blocks have been general purpose (speech to text, text to speech, face recognition for identity verification), so to build models specific to your organization, you still needed both data science and ML engineering skills.

The good news is that with the ongoing trend to democratize AI/ML, we’ve been seeing a new category of tools called AutoML, and that’s what we’re going to cover below.

And as a bonus, we’re also going to throw AutoML’s hat in the ring for a predictive data science competition where over 2,500 ML engineers and data scientists competed for prize money. And the results may not be what you’d expect!

What is AI and ML?

Before we get into AutoML, let’s quickly define some terminology:

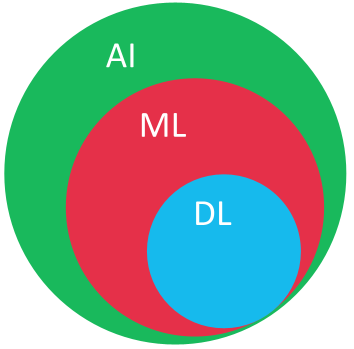

AI or Artificial Intelligence is the umbrella term for automating intellectual tasks performed by humans.

ML or Machine Learning is a subset of AI that enables computers to learn by searching for patterns in data and building logic models automatically. Fraud detection is an example of an ML model: you get a call from your credit card company when an unusual purchase is made.
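
To make "learning patterns from data" a little more concrete, here is a deliberately tiny sketch in Python. It is not how production fraud detection works (real systems use many features and far richer models); it simply learns a cardholder's typical spending from past purchases and flags amounts that sit far outside that learned pattern, using a basic z-score threshold. The purchase history, the function names, and the 3-standard-deviation threshold are all hypothetical, chosen only for illustration.

```python
from statistics import mean, stdev


def fit_spending_profile(history):
    """'Learn' a cardholder's normal spending: mean and sample std dev of past amounts."""
    return mean(history), stdev(history)


def is_unusual(amount, profile, threshold=3.0):
    """Flag a purchase more than `threshold` standard deviations from the learned mean."""
    mu, sigma = profile
    return abs(amount - mu) > threshold * sigma


# Hypothetical purchase history in dollars (made-up data for illustration).
history = [42.10, 18.75, 63.40, 25.00, 51.20, 37.80, 29.95, 44.60]
profile = fit_spending_profile(history)

print(is_unusual(48.00, profile))    # an everyday purchase
print(is_unusual(2400.00, profile))  # a purchase far outside the learned pattern
```

The key point is that nobody hand-wrote the "normal" range: it is derived from the data, so a different cardholder's history would produce a different rule.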

Finally, within ML there is a subset of techniques called Deep Learning, or DL, in which multi-layered neural networks perform tasks like speech and image recognition. If you have used Google Translate, or a photo management tool that groups pictures of your friends by recognizing their faces, then you have probably used a DL model.
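
As a rough illustration of what "multi-layered" means, the Python sketch below stacks two small layers of threshold units to compute XOR, a function that a single threshold unit cannot represent. Unlike a real DL model, whose millions of weights are learned from data during training, the weights here are hand-picked assumptions so the example stays small and easy to follow; the function names are hypothetical.

```python
def step(z):
    """Threshold activation: output 1 if z > 0, else 0."""
    return 1 if z > 0 else 0


def layer(inputs, weights, biases):
    """One fully connected layer: weighted sum of the inputs plus a bias, then activation."""
    return [step(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


# Hand-picked (not learned) weights, for illustration only.
HIDDEN_W = [[1, 1], [1, 1]]  # first hidden unit behaves like OR, second like AND
HIDDEN_B = [-0.5, -1.5]
OUTPUT_W = [[1, -1]]         # output fires for "OR but not AND", i.e. XOR
OUTPUT_B = [-0.5]


def xor_network(x1, x2):
    """Two-layer network: the hidden layer feeds its outputs into the output layer."""
    hidden = layer([x1, x2], HIDDEN_W, HIDDEN_B)
    return layer(hidden, OUTPUT_W, OUTPUT_B)[0]


for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_network(a, b))
```

Each layer builds on the features computed by the one before it; deep networks apply the same idea with many more layers and learned weights.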

ML Use Cases

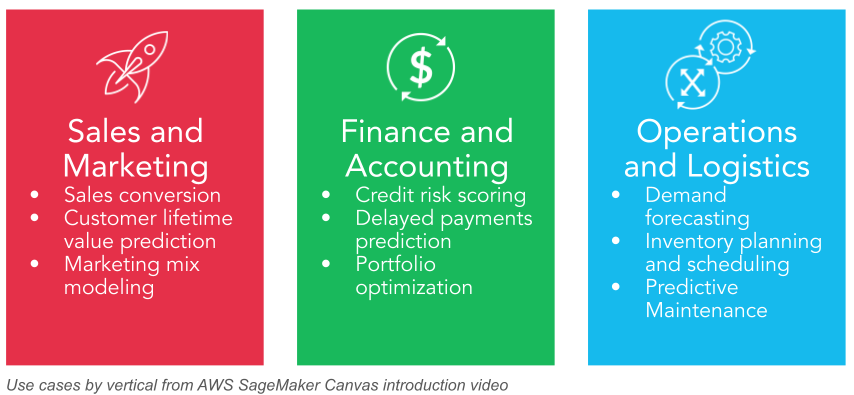

When we talk about use cases for Machine Learning (ML), arguably 80% of use cases involve using either tabular or time-series data. Fortunately, this is the kind of data we all have access to in the enterprise!

Use cases for these two types of data span a range of business areas, including Sales, Marketing, Finance, Accounting, Operations, and Logistics. Applications in all of these areas are possible with the entry points to ML that we cover in this blog.

AI and ML: Past and Present

Even though AI is a 60-year-old field, one of the astonishing things we’re noticing is the speed at which organizations are adopting AI and ML. Just five years ago, Forbes reported that a QUARTER of organizations had incorporated AI into their processes and product/service offerings. Fast forward to today, and Gartner is projecting that "by the end of 2024, 75% of enterprises will shift from piloting to operationalizing AI."

That’s massive progress, due in part to the democratization of AI, the increasing accessibility of AI tooling, and ever-growing AI communities like HuggingFace, where many companies and individuals are contributing to the speed of progress. As Dr. Bratin Saha, VP AI & ML at AWS, put it, "ML isn't the future anymore. ML is critical to innovation today."

Rapid Advancements

The work of two leading AI research organizations, DeepMind and OpenAI, gives a picture of how quickly this progress is happening.

OpenAI’s GPT-3, its third-generation language prediction model, was trained on massive datasets, including all of Wikipedia. Through prompt design, GPT-3 can generate text of such high quality that it can be difficult to tell whether or not it was written by a human. Interestingly, both of these research organizations have since turned their focus to software development.

DeepMind has developed AlphaCode, which is designed to compete in programming competitions. Meanwhile, a descendant of OpenAI’s GPT-3 that has been additionally trained on code from 54 million GitHub repositories is the AI powering the code auto-completion tool GitHub Copilot, your "AI pair programmer."

If neither of these is open enough for your liking, give the just-released, free, and open-sourced BLOOM a test drive on your tensorbook.

For now, AI assistance may make our jobs easier, but research into the code these tools generate, and the security vulnerabilities found in it, suggests that our responsibilities will also expand to include keeping an eye on the AI. It's a fascinating time we're living in.

Flywheel of Future Innovation

As AI models grow ever larger, two positive trends are emerging that make them more approachable. The first is that, according to ARK Investments, training costs appear to be declining at twice the rate of Moore’s Law. This feeds the democratization of AI, in which the best AI could become affordable to more than just the very largest companies. ARK Investments predicts that "the cost to train a neural network equivalent in size to the human brain ($2.5B) is likely to drop to $600k by 2030."

The second is that there appears to be tremendous opportunity for innovation by building on top of very large language models such as BLOOM. In the words of Sam Altman, CEO of OpenAI:

"I think there’ll be a lot of access provided to create the model for medicine or ... whatever. And then those companies will create a lot of enduring value because they will have a special version of it. They won’t have to have created the base model, but they will have created something they can use just for themselves or share with others that has this unique data flywheel going that improves over time and all of that."

Summary

These examples highlight the rapid pace of AI advancement, how it’s fueled by cloud and hardware acceleration, and how this progress can be built upon to create future innovation. They set the stage for how a new generation of tooling and entry points to AI/ML, such as AutoML, builds upon the advancements of this 60-year-old field.

Next Up

Next up in this three-part blog series: overcoming challenges to adoption, and getting hands-on with AutoML.