GenAI in Production: Avoiding the POC Purgatory

How did we get here?

In my job, I get the opportunity to see how many different companies are thinking about GenAI, whether the push is "top down", "driven from the trenches", or "our CEO vibe coded over the weekend and now we are changing our entire business model". Regardless of which it is (hopefully not the last one), these initiatives fall flat more often than not. But why?

What is causing successful businesses to prioritize a new technology but never realize production value?

More importantly, why have our client projects consistently reached production when industry data shows vastly different trends?

The answer isn't better models or a bigger budget. We've identified a few techniques we employ on every GenAI client project that have allowed us to overcome many of the hurdles causing companies to get stuck in POC (Proof of Concept) purgatory. But before I go into those, let's do some fact checking!

Does the Industry See the Same Trend as I Do?

While I have plenty of anecdotal evidence for this phenomenon, I hope you don't just take my word for it. It's all about the data!

If we look back a little over a year ago we were already getting data that...

"Over 85% of AI projects [are] failing to reach production"

from the Data+AI Summit 2024: An Executive Summary for Data Leaders.

That is a staggering number for what the business world is touting as "the most transformational technology that everyone is currently taking advantage of". After seeing this, I assumed much of it was due to the silver-bullet promises that often come with the early stages of the Gartner Hype Cycle, and as time went forward, this would slowly fall and become a more normal percentage.

Fast forward to July 2025 and we have the MIT State of AI in Business 2025 report telling a different story...

"Sixty percent of organizations evaluated such tools, but only 20 percent reached pilot stage and just 5 percent reached production"

... we went the wrong direction. It seems that 95% of projects are now atrophying before production. That's no good.

Thankfully, it looks like MIT has been seeing many of the same hurdles we have, so let's dig into how we can actually get GenAI into production and start getting value out of it, instead of spinning (very expensive) wheels.

Start with the Problem

This seems like a given, but when people are constantly asking for progress in a new technology, the basics are often the first thing to suffer. Every GenAI project we do starts with a discovery phase that works with both the business leaders and the expected day-to-day users to ensure a few different things:

- How will this fit into our current processes? (Value stream mapping)

- Will users want to use this tool? (UX and "ground truthing" the problem)

- Is what we are building potentially valuable? (ROI forecasting)

- Is the direction we are going creating foundational capabilities we can build on later? Or one-off silos? (Architecture review/proposal)

- Do we have an opportunity to build into existing workflows instead of creating "Yet Another Tool"?

- Is GenAI even the right fit for this problem?

Surprisingly for a software consulting company, we LOVE when these questions lead us to the conclusion that a particular project has no legs. We avoid wasting our time and the client's money, and we can pivot to building something that is actually valuable for everyone involved.

"Despite 50% of AI budgets flowing to sales and marketing (from the theoretical estimate with executives), some of the most dramatic cost savings we documented came from back-office automation. While front-office gains are visible and board-friendly, the back-office deployments often delivered faster payback periods and clearer cost reductions."

— MIT's State of AI in Business 2025

Remember...

When you don't start with your problem, you can't define success upfront.

When you can't define success upfront, then the users, stakeholders, and various business partners end up with conflicting expectations.

When you have conflicting expectations, the project gets bogged down in the later stages and leads to scope creep, rework, and ultimately POC Purgatory.

Measure!

This goes hand in hand with defining success upfront. While it does apply to business metrics like identified KPIs, those are not unique to a GenAI project. What is more unique is the practice of "Evaluations" and ultimately "Metrics Driven Development (MDD)".

After we define a business problem, we work with the Subject Matter Experts (SMEs) to create what are called "Ground Truths". The types of questions and answers vary depending on the type of solution we are building, but in general, they are a codified definition of success. Then, we can break these ground truths into two types of evaluations:

1. Objective Evals

There is a defined "correct" answer that everyone can agree on and would grade the same way. Think of this like a multiple choice test, or in our case, something like a GenAI data structuring pipeline where we know exactly what the structured output should look like. We essentially give our GenAI solution a test, then grade it with our predefined answer sheet to determine if we are on track or not. When possible, we opt for these kinds of evaluations.

2. Subjective Evals

This technique is usually applied to open-ended questions, or in our case, chat interface interactions where we expect both free-form input and output. Even if you have a defined "correct" answer, grading is difficult, and scores would vary if you asked multiple people to grade the same response. This is where LLM-as-a-Judge evaluations come in. We can leverage LLMs to do something they are already very good at: categorizing data. We can then use that categorized data to derive scores based on which items are present in our question, ground truth, generated answer, and even provided context.
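To make the two evaluation types concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not our evaluation framework: the names `objective_accuracy`, `judged_f1`, and `toy_judge` are hypothetical, the TP/FP/FN labels and the F1 formula are one common way to turn categorized statements into a score, and `toy_judge` is a stand-in for a real LLM call so the example runs on its own.

```python
from typing import Callable


def objective_accuracy(predictions: list[dict], ground_truths: list[dict]) -> float:
    """Objective eval: fraction of records that exactly match the answer sheet."""
    if len(predictions) != len(ground_truths):
        raise ValueError("predictions and ground_truths must be the same length")
    if not ground_truths:
        return 0.0
    matches = sum(pred == truth for pred, truth in zip(predictions, ground_truths))
    return matches / len(ground_truths)


# A judge labels each statement it finds as one of:
#   "TP": in the generated answer and supported by the ground truth
#   "FP": in the generated answer but not supported by the ground truth
#   "FN": in the ground truth but missing from the generated answer
Judge = Callable[[str, str, str], list[str]]


def judged_f1(question: str, answer: str, ground_truth: str, judge: Judge) -> float:
    """Subjective eval: derive an F1-style score from the judge's categories."""
    labels = judge(question, answer, ground_truth)
    tp, fp, fn = labels.count("TP"), labels.count("FP"), labels.count("FN")
    denominator = 2 * tp + fp + fn
    return 0.0 if denominator == 0 else (2 * tp) / denominator


def toy_judge(question: str, answer: str, ground_truth: str) -> list[str]:
    """Hypothetical stand-in for an LLM judge: compares sentences literally."""
    answer_facts = {s.strip().lower() for s in answer.split(".") if s.strip()}
    truth_facts = {s.strip().lower() for s in ground_truth.split(".") if s.strip()}
    return (
        ["TP"] * len(answer_facts & truth_facts)
        + ["FP"] * len(answer_facts - truth_facts)
        + ["FN"] * len(truth_facts - answer_facts)
    )


# Illustrative, made-up data (not from a client project).
predicted = [{"crop": "corn", "acres": 120}, {"crop": "soy", "acres": 80}]
expected = [{"crop": "corn", "acres": 120}, {"crop": "soy", "acres": 95}]
print(objective_accuracy(predicted, expected))  # 0.5

print(judged_f1(
    "What does the policy cover?",
    "Policy covers hail. Policy covers flood.",
    "Policy covers hail. Policy covers wind.",
    toy_judge,
))  # 0.5
```

In a real pipeline the judge would be a prompted LLM call rather than a string comparison. Keeping it behind a plain callable means the scoring logic can be tested without calling a model.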

How Do We Use Them?

Source Allies has spent a lot of time investing in understanding how to better measure GenAI solutions. We've found that having a standardized measurement you can point to and say "Our accuracy is going up", or "Our accuracy is going down" is invaluable for navigating stakeholder conversations, both business and legal. Often the difference between going to production and getting stuck is simply a plain way to say "We did our due diligence, and here is the result".

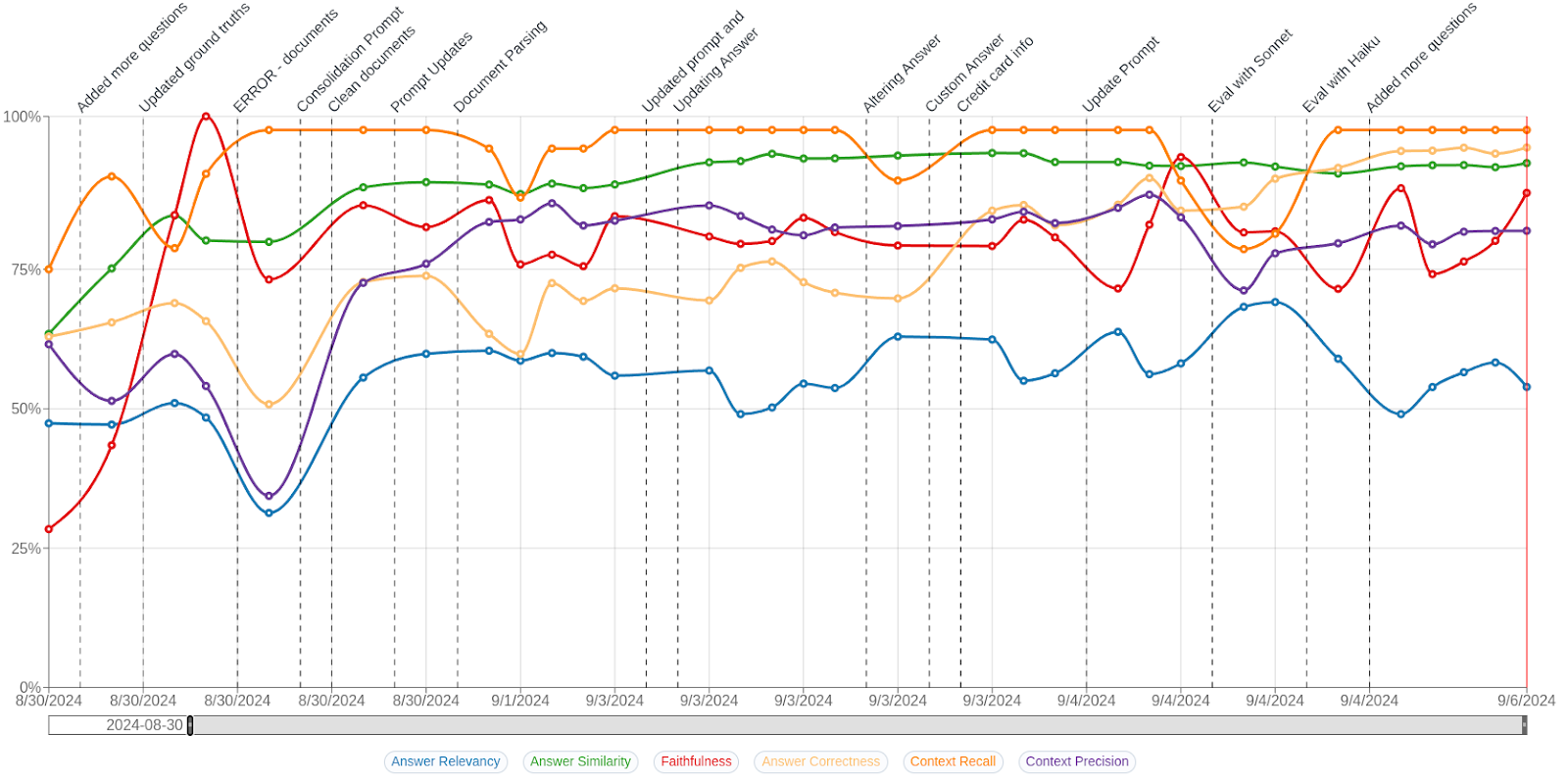

Here is an example of what an accelerated evaluations metric timeline looks like:

Notice how the Answer Correctness metric (the yellow line) improves over iterations as we make changes to things like document ingestion, document retrieval techniques, and even document inference and agentic prompts!
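A timeline like this only needs one score per iteration. As a rough sketch (the function names, iteration labels, and scores below are all made up for illustration), you could track the change between runs and flag any iteration where the metric dropped:

```python
def metric_deltas(history: list[tuple[str, float]]) -> list[tuple[str, float]]:
    """Return (iteration_label, change_vs_previous_run) for every run after the first."""
    return [
        (label, round(score - previous_score, 4))
        for (_, previous_score), (label, score) in zip(history, history[1:])
    ]


def regressions(history: list[tuple[str, float]], tolerance: float = 0.0) -> list[str]:
    """Labels of iterations whose score fell by more than `tolerance`."""
    return [label for label, delta in metric_deltas(history) if delta < -tolerance]


# Hypothetical Answer Correctness scores, one per iteration.
answer_correctness = [
    ("baseline", 0.52),
    ("better chunking", 0.61),
    ("new retriever", 0.58),
    ("prompt rewrite", 0.70),
]

print(metric_deltas(answer_correctness))
# [('better chunking', 0.09), ('new retriever', -0.03), ('prompt rewrite', 0.12)]
print(regressions(answer_correctness))  # ['new retriever']
```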

There are so many metrics available for different types of GenAI patterns. Our favorites are Answer Correctness and Faithfulness, but we measure many more! If you want to get a better understanding of the different types of measures, and some ways to calculate them, check out Ragas. While we've built our own evaluation framework optimized for our patterns, the Ragas documentation provides an excellent introduction to evaluation concepts.

Defining Success

A codified definition of success isn't just for grading individual questions; it also sets your production deployment bar.

Before any development starts, we require stakeholders to commit to specific thresholds: "We go to production at 85% Answer Correctness and 90% Faithfulness." This agreement transforms evaluations from technical metrics into binding go/no-go criteria.
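Agreed thresholds like these are easy to encode as an automated check. The sketch below is one possible shape, assuming scores are stored as fractions between 0 and 1. The function name `production_gate` and the metric keys are hypothetical, and the measured scores are invented for the example; only the 85% and 90% thresholds come from the text above.

```python
def production_gate(
    scores: dict[str, float], thresholds: dict[str, float]
) -> tuple[bool, list[str]]:
    """Return (go, reasons): go is True only if every threshold is met."""
    failures = []
    for metric, minimum in thresholds.items():
        score = scores.get(metric)
        if score is None:
            # A metric nobody measured cannot count as passing.
            failures.append(f"{metric}: not measured")
        elif score < minimum:
            failures.append(f"{metric}: {score:.2f} < {minimum:.2f}")
    return (not failures, failures)


# Thresholds stakeholders committed to before development started.
thresholds = {"answer_correctness": 0.85, "faithfulness": 0.90}

# Hypothetical results from the latest evaluation run.
latest_scores = {"answer_correctness": 0.88, "faithfulness": 0.86}

print(production_gate(latest_scores, thresholds))
# (False, ['faithfulness: 0.86 < 0.90'])
```

Returning the reasons alongside the decision gives stakeholders the plain "here is the result" statement, not just a pass or fail.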

Here's the pattern we've seen repeatedly: stakeholders who can't align on success criteria in week 1 will still be debating them in month 6, except now you've invested significant time and budget chasing an undefined target.

If you want to see an example of evaluations in action, check out this AWS Partner Blog where we built an underwriting assistant for a crop insurance company interfacing with 500,000+ pages of information per reinsurance year.

There's No "One Tool to Rule Them All"

Purchasing Office Copilot for your enterprise doesn't eliminate the need for specialized or custom tools leveraging GenAI functionality. Likewise, if you invest in creating a custom agentic tool for your underwriters, you should not expect it to fulfill the general-purpose needs of a company-wide chatbot.

While GenAI has many unique qualities, at the end of the day, it is simply software we can use to solve problems. Just like all software, there are times when buying a product or a SaaS platform makes the most sense, but there are also times when building custom software is the right answer.

We work with many companies that started down the path of "we will use our purchased AI tool for everything", only to find out it was fantastic at some things, but lacked the ability to perform other tasks they wished to use it for. I typically break down these domains into a couple of categories.

1. Personal Capability Uplift

This is the domain of many general-purpose tools like Office Copilot. It is fantastic at making individual people more efficient at many of their day-to-day tasks like certain kinds of document access, writing emails, and sometimes even things like writing software. The more familiar a person is with AI and prompt engineering, the more effectively they can use this type of tool.

"Consumer adoption of ChatGPT and similar tools has surged, with over 40% of knowledge workers using AI tools personally. Yet the same users who integrate these tools into personal workflows describe them as unreliable when encountered within enterprise systems."

— MIT's State of AI in Business 2025

2. Organizational Capability Uplift

These tools excel at helping an entire organization or organizational unit collectively gain efficiencies or transform their workflows. They are often custom tools (which is what Source Allies specializes in building), but they can also be specialized platforms in your industry tailored to a certain problem. What really sets these tools apart is the ability to change them to match the specific needs of a task, and often to integrate with existing workflows to the point where users need to know nothing about AI or prompting to gain immediate benefit from them.

"The standout performers are not those building general-purpose tools, but those embedding themselves inside workflows, adapting to context, and scaling from narrow but high-value footholds."

— MIT's State of AI in Business 2025

So What Should You Do?

In the future, as with all software, it will not matter if a tool is an "AI" tool or not. It will simply matter if it solves your problem effectively. Yes, your organization needs an AI strategy, but make sure you are not creating a bottleneck by centralizing a problem that does not need to be centralized. Establish guidelines for your developers and business units to enable them to problem solve within pre-approved boundaries. The people closest to the work usually understand the problem statement the best.

Takeaway

Companies are stuck in POC Purgatory because they're skipping the fundamentals:

- Starting with solutions instead of problems

- Deploying without measurable success criteria

- Forcing general-purpose tools into specialized workflows

The MIT report confirms this pattern, finding "high adoption, but low transformation" across 95% of custom enterprise AI initiatives.

The Data is Clear

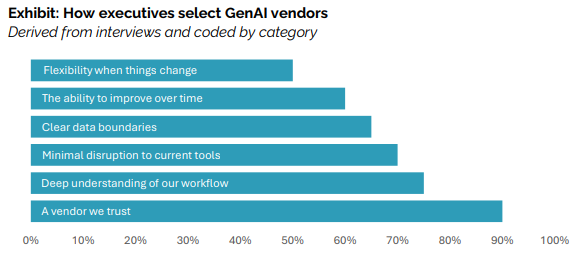

Organizations that invest in specialized tools rather than building general chatbots internally see 2x higher success rates (66% vs 33% according to MIT). But buying alone isn't enough. You need a partner who understands your workflows, measures success objectively, and treats AI deployment as an iterative learning process, not a software installation. As MIT deems it, "A vendor we trust".

Source Allies is a trusted partner for companies working through their Generative AI decisions, and we set enterprises up for success. Our core competencies of problem solving, ownership, and being builders who teach are more applicable than ever to the GenAI landscape.

If you would like to find out more about our successful production deployments at clients, check out the AI category of our Case Studies. We would love to partner with you on your GenAI journey!