This post is part of a series on end-to-end (E2E) testing with Cypress. In it, we look at how our team uses Cypress to improve our workflow and to deploy new features more safely. This information is also available in this presentation I give on the topic. You can also check out a demo application with Cypress in this repo.

What is Cypress?

Cypress is "fast, easy and reliable testing for anything that runs in a browser". It is batteries-included so it comes fully baked-in with tons of features and perks. It is also open-source, has a strong user community, and great documentation.

Cypress accesses the application through the browser, so it is most suitable for end-to-end (E2E) acceptance tests or user interface (UI) tests. With some home-brewing, Cypress can also be used to unit test your components. It also gives you full control over the network traffic for API calls, so it can be used for API testing as well.

Levels of Our Application that Cypress Tests

Our team uses Angular, which comes with Jasmine/Karma for unit testing and Protractor for E2E tests. We chose to replace the former with Jest since our team had more experience with it. We chose not to use Protractor because of past experiences with Selenium testing.

TestBed is Angular's way of setting up the modules, providers, and child components necessary to shallow mount a component. It is necessary in order to do any UI testing at the unit test level. We did not enjoy the developer experience that TestBed provides for UI testing. It was difficult to set up and cumbersome to interact with the document object model (DOM, the term used for the outline of HTML elements on a webpage).

We use Jest to unit test our components. Instead of doing these tests with TestBed, we reserved Jest unit testing for TypeScript logic only. This means any logic around function calls and flags was tested, but its connection to the template or DOM was not tested at this level. This is where we leveraged Cypress tests.
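To illustrate the split, here is a minimal sketch of the kind of TypeScript-only logic we would cover at the unit level. The `SaveFormLogic` class, its members, and the error messages are hypothetical names rather than code from our application, and a small `check` helper stands in for Jest's `expect` so the snippet runs on its own.

```typescript
// Hypothetical component logic: no template, no TestBed, no DOM.
class SaveFormLogic {
  isSaving = false;
  errorMessage: string | null = null;
  private readonly save: (name: string) => boolean;

  // The save function is injected so a test can pass in a stub.
  constructor(save: (name: string) => boolean) {
    this.save = save;
  }

  submit(name: string): void {
    this.errorMessage = null;
    const trimmed = name.trim();
    if (trimmed === "") {
      this.errorMessage = "Name is required";
      return;
    }
    this.isSaving = true;
    const ok = this.save(trimmed);
    this.isSaving = false;
    if (!ok) {
      this.errorMessage = "Save failed";
    }
  }
}

// Minimal stand-in for Jest's expect(...).toBe(...).
function check(condition: boolean, message: string): void {
  if (!condition) throw new Error(message);
}

// Stub that records what it was asked to save.
const savedNames: string[] = [];
const form = new SaveFormLogic((name) => {
  savedNames.push(name);
  return true;
});

form.submit("   ");
check(form.errorMessage === "Name is required", "blank names are rejected");
check(savedNames.length === 0, "save is not called for blank names");

form.submit(" Ada ");
check(form.errorMessage === null, "valid names clear the error");
check(savedNames[0] === "Ada", "save receives the trimmed name");
```

Whether the error message actually shows up on the page, or whether the save button is disabled while `isSaving` is set, is exactly the kind of question this level of test leaves unanswered.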

Our Team Workflow

The tech ecosystem regarding QA requirements and dynamics is complex. Every team or project will vary in their approach as they all have unique needs. QA can be embedded on a team or separated as a different group. Developers can write automated tests or QA automation engineers can. Below is the workflow that our team uses.

A quality assurance (QA) team member writes test cases and manually tests, while our developers write automated tests. We've found that writing E2E tests has bolstered communication on our team, particularly by tightening our dev-to-QA feedback loop.

At the beginning of each story, developers and QA come together to whiteboard the story around its acceptance criteria. If needed, developers will have a separate technical implementation whiteboarding session to further refine their approach.

When the developers are wrapping up feature work, they meet again with QA to discuss what was implemented. At this point, the test cases have been written and are refined, and the developers and QA decide which E2E test cases should be automated via Cypress.

Even if test cases are automated, QA still rigorously tests them by hand when the feature is implemented. They also check the effectiveness of the Cypress tests against their manual testing for a given case. From that point, the tests are marked as "automated" and Cypress can act as our regression test suite. This allows us to focus on smoke and exploratory testing, rather than redoing tedious manual regression testing.

Integration with Build and Deploys (working towards CI/CD)

Let's set out some definitions around builds and deploys, as the topic can be a bit technical and confusing! First, for those who don't know, CI/CD stands for continuous integration/continuous deployment. This refers to the practice of getting code into master and deployed to your environments faster and in smaller chunks.

I will refer to a build or build artifact as the code that is going to be released to an environment. The release process involves deploying code, so another term for a release is a deploy. Both a build and a release can fail (red) or pass (green). A failure means that the next step will not be performed.

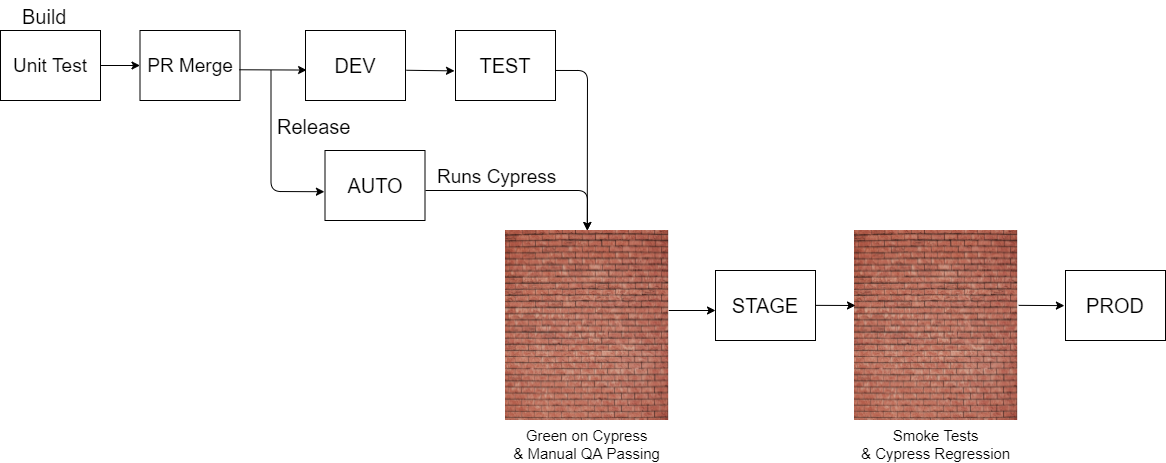

The deploy process releases a build to an environment. An environment consists of a group of resources that are required to run an instance of our digital application (distinct URL, database, API server, UI server, functions, etc). The five environments we deploy builds to are DEV, TEST, AUTO, STAGE, and PROD. DEV is for developers to check their work, TEST is for QA, AUTO is for our E2E tests, STAGE is for our business users, and PROD is for our customers to use.

Our deploy process as it pertains to Cypress is that we run Cypress tests on deploy to AUTO. The tests are required to pass in AUTO (green) in order to go to STAGE. You can see the details of this in the figure below and in the text that follows:

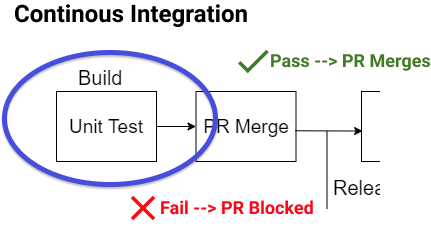

When we create a pull request (PR) to master, we are requesting new code to be merged and deployed. At this point, a build happens. As part of our build process, before any releases happen, we run integration tests. These include unit tests in Jest on the front end, unit tests in MSTest on the back end, and SpecFlow acceptance tests on our API. If these fail, the build does not release to any of our environments.

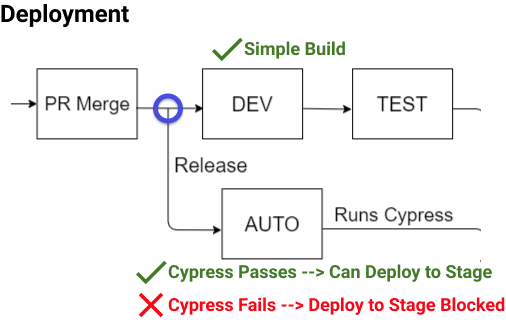

After building, we deploy to DEV and AUTO environments concurrently. If the DEV release passes (green), the build also goes to TEST. These releases stand up resources and deploy our new code — nothing special.

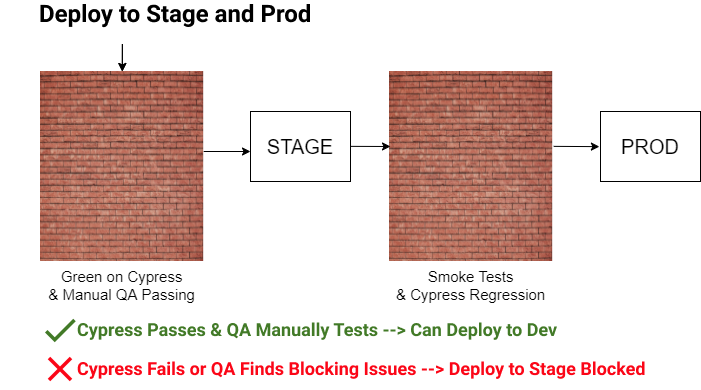

The AUTO release does the same release as DEV and TEST with an additional step. Once the new code has been deployed to the environment, we trigger an additional build that runs all of our Cypress E2E tests against the AUTO environment. If the tests fail, the release fails (red) and rolls back to the previous build. If the tests pass, the release passes (green).
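As a rough sketch of the AUTO release logic described above, the function below deploys a build, runs the E2E suite, and rolls back on failure. `releaseToAuto`, `AutoEnvironment`, and the build ids are hypothetical names chosen for illustration. This models the behaviour only and does not reproduce our actual pipeline configuration.

```typescript
type ReleaseStatus = "green" | "red";

interface AutoEnvironment {
  currentBuild: string; // id of the build currently deployed to AUTO
}

// runE2eTests stands in for the additional build that runs Cypress against AUTO.
function releaseToAuto(
  env: AutoEnvironment,
  newBuild: string,
  runE2eTests: (build: string) => boolean
): ReleaseStatus {
  const previousBuild = env.currentBuild;
  env.currentBuild = newBuild; // the regular deploy step

  if (runE2eTests(newBuild)) {
    return "green";
  }
  env.currentBuild = previousBuild; // tests failed: roll back
  return "red";
}

const auto: AutoEnvironment = { currentBuild: "build-41" };

// A failing suite leaves AUTO on the previous build.
const failing = releaseToAuto(auto, "build-42", () => false);
const buildAfterFailure = auto.currentBuild; // "build-41"

// A passing suite keeps the new build in place.
const passing = releaseToAuto(auto, "build-43", () => true);
const buildAfterSuccess = auto.currentBuild; // "build-43"
```

Passing the test run in as a function keeps the sketch independent of how the suite is actually started.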

For tests that fail, we capture screenshots. Regardless of whether tests pass or fail, a video is captured of each test. Both are saved to the build artifact after the Cypress tests are run.

In order for a build to go to STAGE, we require two things: Cypress tests passing in AUTO (green) and QA signing off on all features merged. Once a release is in STAGE, it can go to PROD if it has been signed off by our business counterparts.
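The promotion rules above can be summarized in a few lines of code. This is a minimal sketch under the assumption that each gate can be reduced to a boolean. `BuildState` and `canPromoteTo` are hypothetical names, not part of any tool we use.

```typescript
interface BuildState {
  cypressGreenInAuto: boolean; // Cypress suite passed against AUTO
  qaSignedOff: boolean;        // QA signed off on all merged features
  businessSignedOff: boolean;  // business counterparts approved the release in STAGE
}

function canPromoteTo(target: "STAGE" | "PROD", build: BuildState): boolean {
  // Both conditions are required before a build may reach STAGE.
  const stageReady = build.cypressGreenInAuto && build.qaSignedOff;
  if (target === "STAGE") {
    return stageReady;
  }
  // PROD additionally needs the business sign-off on the STAGE release.
  return stageReady && build.businessSignedOff;
}

const candidate: BuildState = {
  cypressGreenInAuto: true,
  qaSignedOff: true,
  businessSignedOff: false,
};

const readyForStage = canPromoteTo("STAGE", candidate); // true
const readyForProd = canPromoteTo("PROD", candidate);   // false
```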

Working with Feature Flags

We use LaunchDarkly for feature flags to help remove barriers to releasing partial features. Given our deployment and QA workflows, we needed to figure out how to integrate feature flags into our Cypress testing flow. While it is possible to toggle feature flags for certain Cypress tests, we decided to create a workflow that suited us. What we ended up agreeing on as a dev team is:

- Start with a feature flag on in DEV/Local for initial feature development
- We submit a PR to merge the feature with the flag off in AUTO
- We update Cypress tests locally with the feature flag on
- When the Cypress PR merges we turn the feature flag on in AUTO
- The Cypress tests pass in AUTO with the new feature 🎉

I will go into more detail on this in future posts.

Conclusion

Cypress has brought a lot to our team and our process. It has encouraged our team to communicate feature expectations. It has provided us with a tool to catch defects as they are being introduced. Possibly its biggest value is that Cypress serves as a regression test suite. This helps us feel assured that we have not negatively affected any previous features when implementing new ones.

Cypress also offers a breadth of helpful features that allow developers to dive into E2E testing without having to create a Page Object Model or write wait helpers. The interactive test runner, screenshots, and video output also make this tool highly valuable to non-developer members of our team.