
Solr – features and configuration details

Solr is a standalone enterprise search server with a web-services-like API. You put documents into it (this is called "indexing") by sending XML over HTTP, and you query it with HTTP GET requests and receive XML results. Some of the main features of Solr are:
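As a rough illustration of this XML-over-HTTP exchange, the Python sketch below builds an XML `<add>` message (which would be POSTed to Solr's /update handler), builds a /select query URL, and parses documents out of an XML response. It performs no network I/O. The base URL http://localhost:8983/solr and the helper names are assumptions for illustration, and only the common response shapes (single values and `<arr>` multi-values) are handled.

```python
import urllib.parse
import xml.etree.ElementTree as ET

SOLR_URL = "http://localhost:8983/solr"  # assumed base URL of the Solr web app


def build_add_message(docs):
    """Build an XML <add> message for Solr's /update handler from dicts."""
    add = ET.Element("add")
    for doc in docs:
        doc_el = ET.SubElement(add, "doc")
        for name, value in doc.items():
            field = ET.SubElement(doc_el, "field", name=name)
            field.text = str(value)
    return ET.tostring(add, encoding="unicode")


def build_select_url(query, rows=10):
    """Build an HTTP GET URL for Solr's /select handler."""
    params = urllib.parse.urlencode({"q": query, "rows": rows})
    return f"{SOLR_URL}/select?{params}"


def parse_docs(xml_response):
    """Return the documents in a Solr XML response as a list of dicts."""
    root = ET.fromstring(xml_response)
    docs = []
    for doc_el in root.iter("doc"):
        doc = {}
        for child in doc_el:
            if child.tag == "arr":  # multi-valued field: <arr><str>..</str>...</arr>
                doc[child.get("name")] = [v.text for v in child]
            else:  # single value: <str>, <int>, <float>, ...
                doc[child.get("name")] = child.text
        docs.append(doc)
    return docs
```

After POSTing an `<add>` message, Solr also needs a `<commit/>` before the new documents become searchable.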

- Advanced Full-Text Search Capabilities

- Faceted Browsing: narrowing down search results by category (e.g., manufacturer, price or author)

- Optimized for High Volume Web Traffic

- Standards-Based Open Interfaces - XML and HTTP

- Comprehensive HTML Administration Interfaces

- Server statistics exposed over JMX for monitoring

- Scalability - Efficient Replication to other Solr Search Servers

- Flexible and Adaptable with XML configuration

- Extensible Plugin Architecture
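Faceted browsing, for example, is requested with ordinary query parameters. This Python sketch builds a /select URL with `facet=true` and one `facet.field` parameter per field, then reads the counts from the `facet_counts` section of an XML response. The field names (such as `manu`), the base URL and the helper names are hypothetical; nothing is sent over the network.

```python
import urllib.parse
import xml.etree.ElementTree as ET


def build_facet_url(base_url, query, facet_fields):
    """Build a /select URL that requests facet counts for the given fields."""
    params = [("q", query), ("facet", "true")]
    # facet.field is repeated, once per field to facet on
    params += [("facet.field", f) for f in facet_fields]
    return f"{base_url}/select?{urllib.parse.urlencode(params)}"


def parse_facet_counts(xml_response):
    """Return {field: {value: count}} from the facet_counts section."""
    root = ET.fromstring(xml_response)
    counts = {}
    fields = root.find("lst[@name='facet_counts']/lst[@name='facet_fields']")
    if fields is None:  # faceting was not requested
        return counts
    for field in fields.findall("lst"):
        counts[field.get("name")] = {
            item.get("name"): int(item.text) for item in field.findall("int")
        }
    return counts
```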

Solr uses the Lucene search library and extends it. More details about Solr's features can be found here.

Indexing databases using Solr:

Step 1: Download and extract Solr, then set the solr.solr.home system property to the Solr home directory in the extracted distribution (the directory that contains conf/).
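Solr will not start correctly if solr.solr.home points at the wrong directory. A minimal Python sketch of a pre-flight check, assuming the classic single-core layout with conf/solrconfig.xml and conf/schema.xml (the helper names are hypothetical):

```python
from pathlib import Path

# Assumption: classic single-core layout. A multi-core setup uses solr.xml
# plus a conf/ directory per core instead.
REQUIRED_FILES = ("conf/solrconfig.xml", "conf/schema.xml")


def missing_solr_home_files(solr_home):
    """Return the required config files that are absent under solr_home."""
    home = Path(solr_home)
    return [rel for rel in REQUIRED_FILES if not (home / rel).is_file()]


def solr_home_jvm_option(solr_home):
    """Return the JVM option that points Solr at solr_home."""
    return f"-Dsolr.solr.home={Path(solr_home).resolve()}"
```

The returned option can be added to the application server's JVM options (for example via JAVA_OPTS for Tomcat).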

Step 2: Deploy solr.war in the application server.

Step 3: Indexing

In Solr, indexing and searching are initiated by sending HTTP requests to the web application. Solr uses the DataImportHandler to handle HTTP requests related to database indexing. It supports two commands: full-import, which imports all data from the database and adds it to the Solr index, and delta-import, which imports only the new inserts and updates since the last import. To set up database indexing:

- Create a db-data-config.xml file and specify its location in solrconfig.xml, in the DataImportHandler section:

```xml
<requestHandler name="/dataimport" class="org.apache.solr.handler.dataimport.DataImportHandler">
  <lst name="defaults">
    <str name="config">/home/username/db-data-config.xml</str>
  </lst>
</requestHandler>
```

- Specify the connection information in db-data-config.xml:

```xml
<dataSource name="ds1" driver="com.mysql.jdbc.Driver" url="jdbc:mysql://host:port/dbname" user="db_username" password="db_password"/>
```

- Open the DataImportHandler page at http://host:port/solr/dataimport to verify that everything is in order.

- Write the full-import query in db-data-config.xml.

- Write the delta-import query in db-data-config.xml.

- Use http://localhost:8983/solr/db/dataimport?command=full-import to do a full-import.

- Use http://localhost:8983/solr/db/dataimport?command=delta-import to do a delta-import.
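The command URLs above follow a simple pattern, so they are easy to generate. A small Python sketch, assuming the handler is registered at /dataimport in a core named db as in those URLs; extra keyword arguments are passed through as request parameters (for example the DataImportHandler's clean or commit options). The function name is hypothetical.

```python
import urllib.parse

# Assumption: DataImportHandler at /dataimport in a core named "db".
DIH_URL = "http://localhost:8983/solr/db/dataimport"
COMMANDS = {"full-import", "delta-import", "status", "reload-config", "abort"}


def dih_command_url(command, **params):
    """Build a DataImportHandler command URL, passing extra params through."""
    if command not in COMMANDS:
        raise ValueError(f"unknown DataImportHandler command: {command}")
    query = urllib.parse.urlencode({"command": command, **params})
    return f"{DIH_URL}?{query}"
```

The resulting URL can be opened in a browser or fetched with urllib.request.urlopen; the status command reports the progress of a running import.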

Doing a full import:

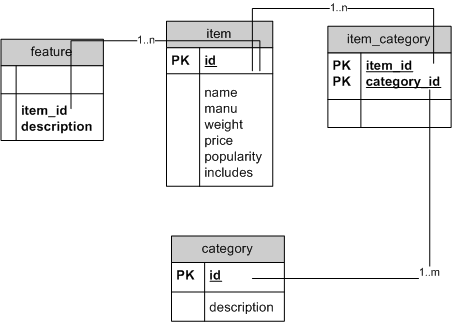

A sample db-data-config.xml might look like this:

```xml
<dataConfig>
  <dataSource driver="org.hsqldb.jdbcDriver" name="ds1" url="jdbc:hsqldb:/temp/example/ex" user="sa" />
  <document name="products">
    <entity name="item" dataSource="ds1" query="select name,id from item">
      <field column="ID" name="id" />
      <field column="NAME" name="name" />
      <entity name="item_category" query="select CATEGORY_ID from item_category where item_id='${item.ID}'">
        <entity name="category" query="select description from category where id='${item_category.CATEGORY_ID}'">
          <field column="description" name="cat" />
        </entity>
      </entity>
    </entity>
  </document>
</dataConfig>
```

Use http://localhost:8983/solr/db/dataimport?command=full-import to do the full import.
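The nested entities in the sample produce one Solr document per row of the root item entity, with the child queries run once per parent row using that row's values. The Python sketch below reproduces that behavior against an in-memory SQLite database, purely to show the resulting documents. It is not how the DataImportHandler is implemented, and it uses parameterized queries instead of the `${...}` text substitution in the config. Lowercase column names and the table contents in the test are assumptions.

```python
import sqlite3


def build_documents(conn):
    """Mimic the sample config's nested entities.

    One document per row of the root "item" entity; for each item, the
    item_category and category queries run with the parent's values, and
    every matching description is appended to the multi-valued "cat" field.
    """
    docs = []
    items = conn.execute("select id, name from item order by id").fetchall()
    for item_id, name in items:
        doc = {"id": item_id, "name": name}
        # item_category entity: runs once per item (config uses '${item.ID}')
        categories = conn.execute(
            "select category_id from item_category where item_id = ? order by category_id",
            (item_id,),
        ).fetchall()
        for (category_id,) in categories:
            # category entity: once per item_category row ('${item_category.CATEGORY_ID}')
            for (description,) in conn.execute(
                "select description from category where id = ?", (category_id,)
            ):
                doc.setdefault("cat", []).append(description)
        docs.append(doc)
    return docs
```

Because an item can collect several descriptions, `cat` has to be declared as a multi-valued field in schema.xml.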

Doing a delta import:

When the delta-import command is executed, the DataImportHandler reads the time of the previous import from conf/dataimport.properties. It uses that timestamp to run the delta queries and, after completion, updates the timestamp in conf/dataimport.properties. This feature requires the database table to have a column holding the time each record was last modified.
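The timestamp lives in an ordinary Java properties file. Assuming the `last_index_time` key and the `yyyy-MM-dd HH:mm:ss` format that the DataImportHandler writes (with colons escaped as `\:`, as Java's properties writer does), a simplified Python reader could look like this. It handles only comments, `key=value` lines and the `\:`/`\=` escapes, not the full properties syntax; the function names are hypothetical.

```python
from datetime import datetime

DIH_TIME_FORMAT = "%Y-%m-%d %H:%M:%S"  # Python equivalent of yyyy-MM-dd HH:mm:ss


def parse_properties(text):
    """Parse key=value lines, skipping blank lines and # or ! comments.

    Only the backslash-colon and backslash-equals escapes are undone.
    """
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", "!")):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip().replace("\\:", ":").replace("\\=", "=")
    return props


def read_last_index_time(text, key="last_index_time"):
    """Return the stored import time as a datetime."""
    return datetime.strptime(parse_properties(text)[key], DIH_TIME_FORMAT)
```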

To set up delta imports, add delta queries to the entity in db-data-config.xml, as shown below:

```xml
<entity name="item" pk="ID"
        query="select name, id from item"
        deltaImportQuery="select name, ID from item where ID='${dataimporter.delta.id}'"
        deltaQuery="select id from item where last_modified > '${dataimporter.last_index_time}'">
  <!-- field mappings as in the full-import example -->
</entity>
```

Use http://localhost:8983/solr/db/dataimport?command=delta-import to do the delta import.
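To see what the two queries do, here is a Python sketch that mimics the delta logic against an in-memory SQLite table: `deltaQuery` collects the ids modified after the last index time, then `deltaImportQuery` fetches each changed row by id. It assumes `last_modified` is stored as `yyyy-MM-dd HH:mm:ss` text, so string comparison matches time order. It is an illustration, not the DataImportHandler's code, and it ignores deleted rows (which the handler deals with separately through a `deletedPkQuery`). The function name and test data are hypothetical.

```python
import sqlite3


def delta_import(conn, last_index_time):
    """Mimic a two-step delta import for the "item" entity.

    1. deltaQuery: find ids of rows modified since last_index_time.
    2. deltaImportQuery: fetch each changed row by id.
    Returns the documents that would be re-indexed.
    """
    changed_ids = [
        row[0]
        for row in conn.execute(
            "select id from item where last_modified > ? order by id",
            (last_index_time,),
        )
    ]
    docs = []
    for item_id in changed_ids:
        row = conn.execute(
            "select name, id from item where id = ?", (item_id,)
        ).fetchone()
        if row is not None:  # row may have vanished between the two queries
            docs.append({"name": row[0], "id": row[1]})
    return docs
```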

Further details can be found here.

Step 4: Configuring schema.xml

schema.xml specifies the schema of the Solr index. Define the new fields in schema.xml, e.g.:

```xml
<field name="id" type="text" stored="true" indexed="true" />
<field name="temp" type="sfloat" stored="true" indexed="true" />
```
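Solr rejects a document that uses a field not declared in schema.xml (unless a dynamic field matches it). A hypothetical Python pre-check, sketched below, reads the `<field>` declarations and flags undeclared fields and values that cannot be parsed for the declared type. The type-to-converter mapping covers only a few example types and is an assumption.

```python
import xml.etree.ElementTree as ET

SCHEMA_FIELDS = """
<fields>
  <field name="id" type="text" stored="true" indexed="true" />
  <field name="temp" type="sfloat" stored="true" indexed="true" />
</fields>
"""

# Hypothetical subset of field types mapped to Python parsers.
CONVERTERS = {"text": str, "string": str, "sfloat": float, "sint": int}


def load_field_types(schema_xml):
    """Map each declared <field> name to its type attribute."""
    root = ET.fromstring(schema_xml)
    return {f.get("name"): f.get("type") for f in root.iter("field")}


def check_document(doc, field_types):
    """List undeclared fields and values that don't parse for their type."""
    problems = []
    for name, value in doc.items():
        field_type = field_types.get(name)
        if field_type is None:
            problems.append(f"undeclared field: {name}")
            continue
        convert = CONVERTERS.get(field_type)
        if convert is None:
            continue  # unknown type: skip the value check
        try:
            convert(value)
        except ValueError:
            problems.append(f"bad value for {name} ({field_type}): {value!r}")
    return problems
```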

Integrating Solr and Nutch

It is useful to index unstructured data with Nutch, index structured data with Solr, and search all of the data together through Solr. To do this:

- Deploy the Solr web application.

- Index structured data using Solr's full-import and delta-import.

- Crawl the unstructured data with Nutch up to the segment-merging stage (only the segments are needed, not the Nutch index).

- Index all contents from all Nutch segments to Solr.

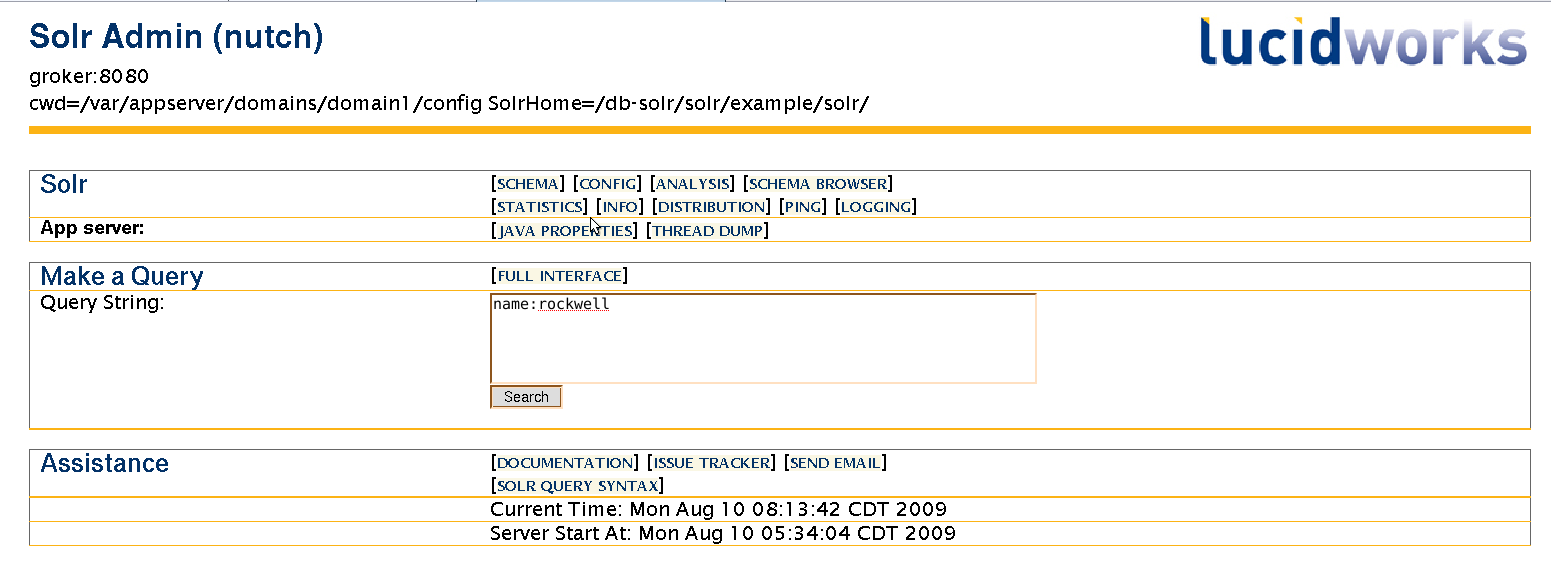

- Search through Solr admin ui http://hostname:port/solr/admin

bin/nutch solrindex http://hostname:port/solr/ crawl/crawldb crawl/linkdb crawl/segments/*

where crawl is the crawl folder created by Nutch
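If the crawl is scripted, the same solrindex command can be assembled programmatically. This Python sketch builds the argument list for `bin/nutch solrindex`, expanding `crawl/segments/*` the way the shell would (segment directories are timestamp-named, so sorting them gives crawl order). The function name and defaults are hypothetical, and the sketch only builds the list; it does not run Nutch.

```python
import glob
import os


def solrindex_command(solr_url, crawl_dir="crawl"):
    """Build argv for `bin/nutch solrindex`, expanding crawl/segments/* like the shell."""
    segments = sorted(glob.glob(os.path.join(crawl_dir, "segments", "*")))
    if not segments:
        raise FileNotFoundError(f"no segments under {crawl_dir}/segments")
    return [
        "bin/nutch", "solrindex", solr_url,
        os.path.join(crawl_dir, "crawldb"),
        os.path.join(crawl_dir, "linkdb"),
        *segments,
    ]
```

To actually run it, pass the list to subprocess.run(cmd, check=True) from the Nutch installation directory.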